UX/UI Published on by Chloé Chassany

Dark patterns, the dark side of nudges

Dark patterns are nudges on the ‘dark’ side: interface design techniques that aim to deceive or manipulate users into performing actions against their will or interests. Increasingly prevalent on the web, they are used to maximise profits or to collect as much personal data as possible.

From the standpoint of ethical design and user experience, dark patterns are a problem because they constrain users’ choices.

But what exactly are dark patterns, how can they be recognised, and what are their consequences?

The origin of dark patterns

There is no real French translation for ‘dark patterns’. Whereas a ‘pattern’ in software engineering is a way of improving, stabilising or securing software, dark patterns are ‘dark’ ways of influencing user behaviour.

The term ‘dark patterns’ was coined by Dr Harry Brignull, a user experience specialist. In 2010 he created the website darkpatterns.org (renamed deceptive.design in 2022) to share the ‘tricks used on websites and apps to get us to do things we don’t want to do’.

In addition to offering a wealth of information and examples, the site lists laws and news related to dark patterns.

Types of dark patterns according to Dr Harry Brignull

Comparison prevention

Users find it difficult to compare product features and prices because they are organised in a complex manner or are difficult to find.

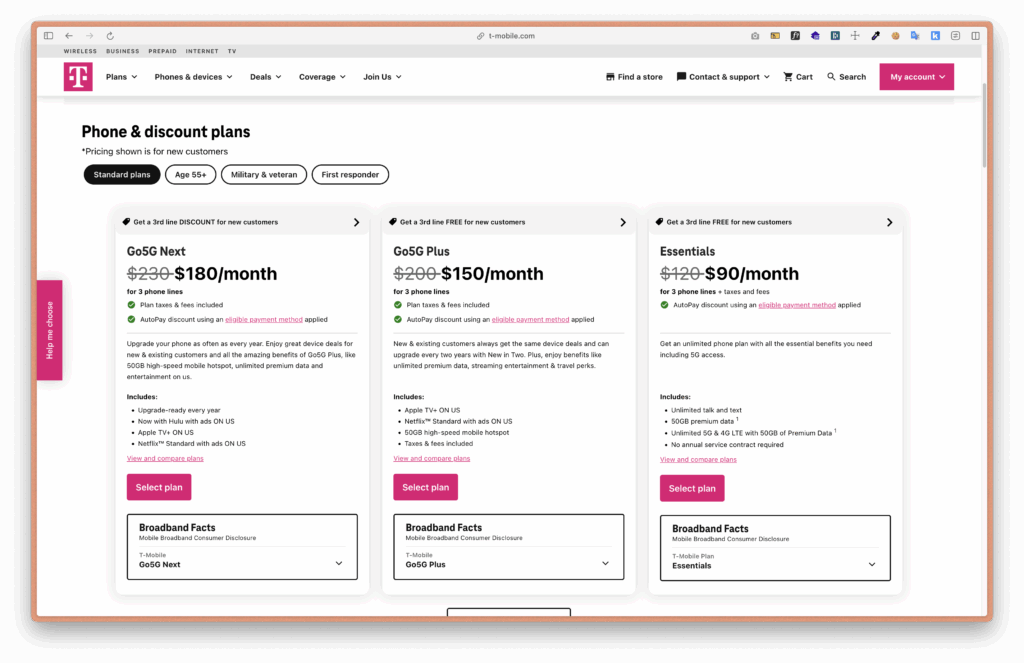

Example: T-Mobile presents its subscriptions from most expensive to least expensive.

Confirmshaming

The user is emotionally manipulated, typically made to feel guilty or ashamed, into doing something they would not otherwise do.

Example: Pushing people to accept an offer by wording the opt-out button so that declining feels shameful.

Disguised ads

The user believes they are clicking on an interface element or native content when it is actually an advertisement.

Example: Advertisements disguised as download buttons on software download sites.

Fake scarcity

The user is pressured into acting (usually making a purchase) by a false indication of limited supply or popularity.

Example: Displaying low stock levels and high sales figures over a very short period of time creates a false sense of popularity. Below, Digitec highlights flash offers with limited stock.

Fake social proof

The user is misled by fabricated or inflated signs of popularity, such as fake testimonials or an artificially large number of reviews.

Example: Several extensions, such as TrustPulse, display ‘live’ notifications about site activity (bookings, purchases, etc.).

Fake urgency

The user is pressured into taking action because they are presented with a false time limit.

Example: Newsletters with a countdown timer announcing the end of a promotion.

Forced action

The user wishes to perform an action, but the system requires them to perform another, undesirable action in return.

Example: Requiring a customer to create an account in order to place an order.

Difficult to cancel

After registering very easily, users find it complicated, if not impossible, to unsubscribe.

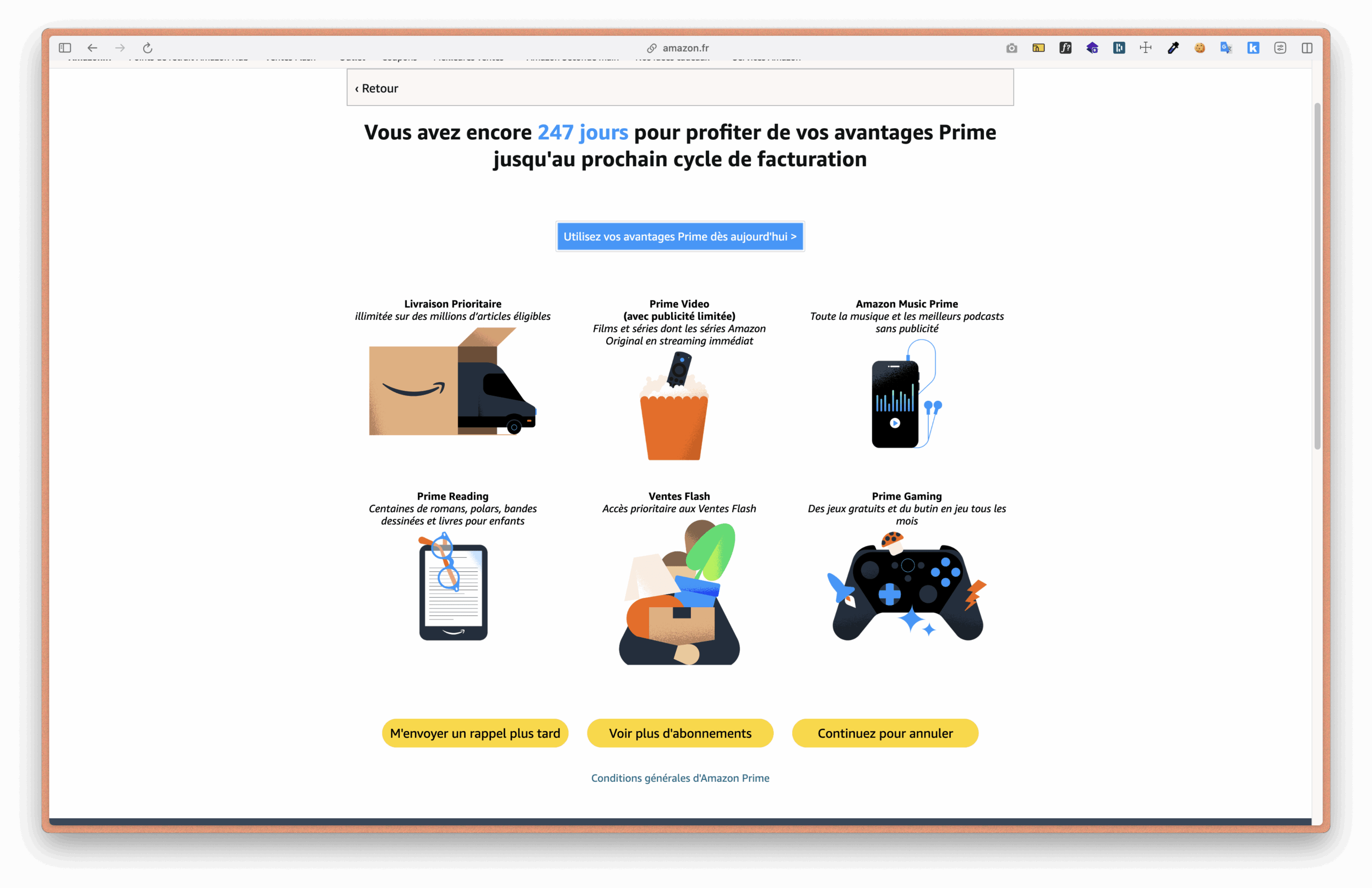

Example: Cancelling an Amazon Prime subscription requires several actions and involves several dissuasive steps.

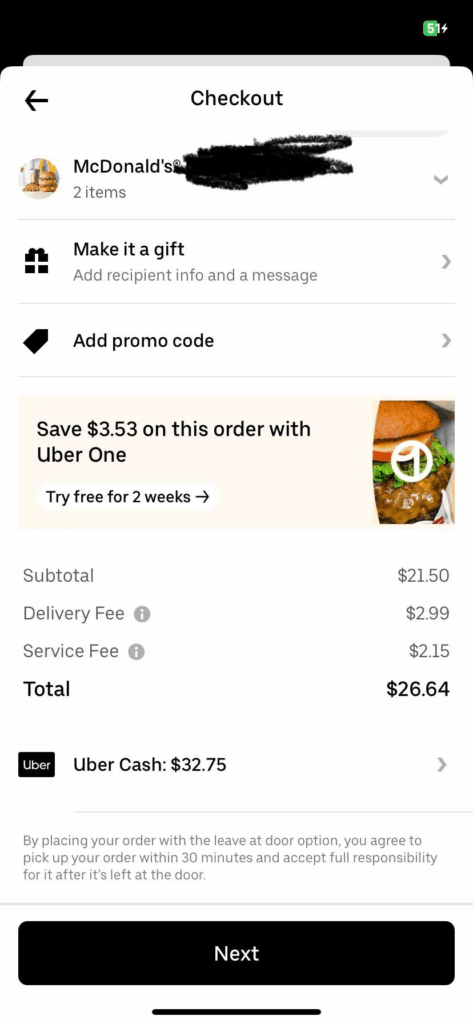

Hidden costs

The user is attracted by a low price but only discovers unexpected costs after investing time and effort.

Example: On Uber Eats, fees are not disclosed while the order is being placed, only at the confirmation stage.

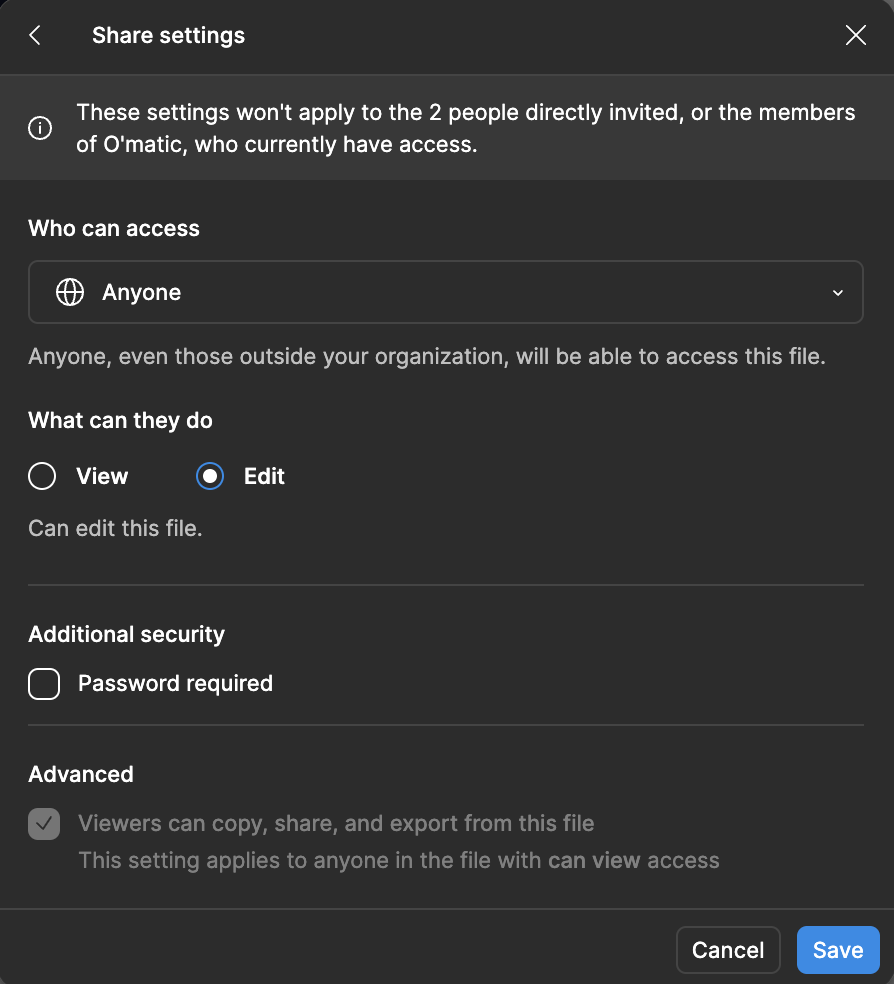

Hidden subscription

The user is unknowingly signed up for a recurring subscription.

Example: On Figma, when a user grants a guest sharing rights, the user (not the guest) is unknowingly charged for an additional subscription.

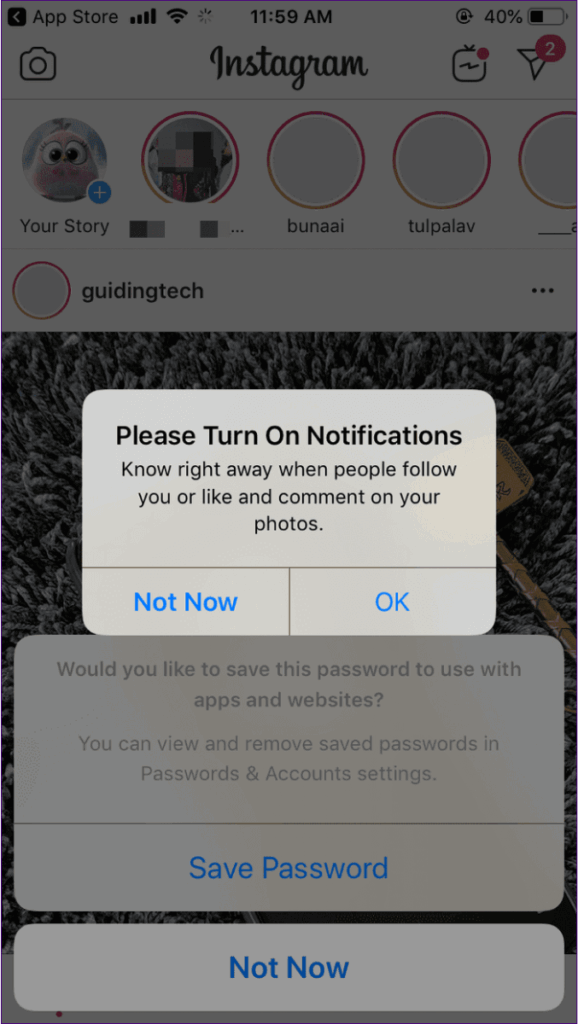

Nagging

The user attempts to perform an action but is constantly interrupted.

Example: Social networks prompting users to enable notifications every time they open the app (as Instagram did in 2018).

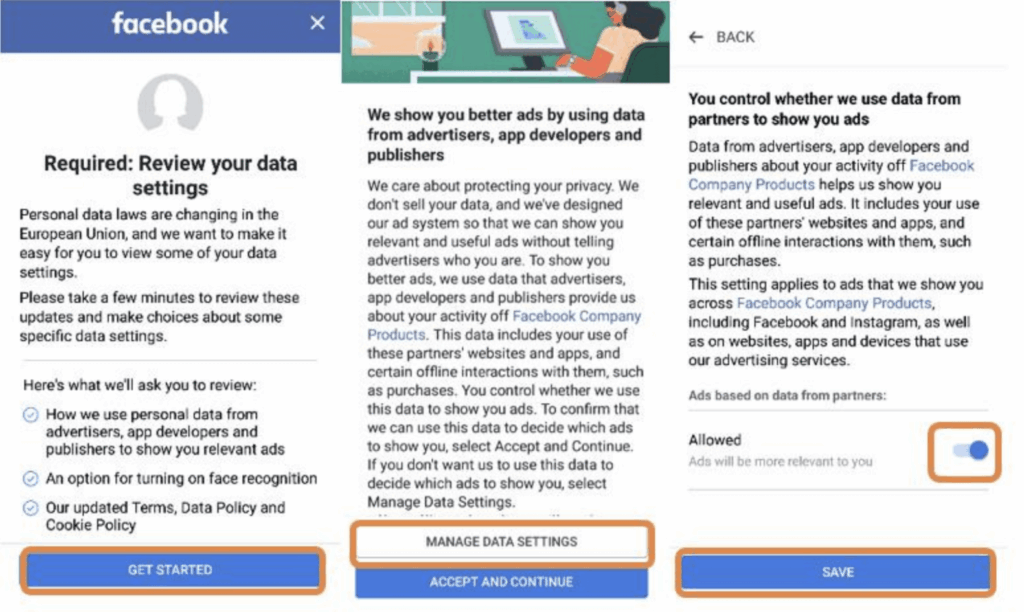

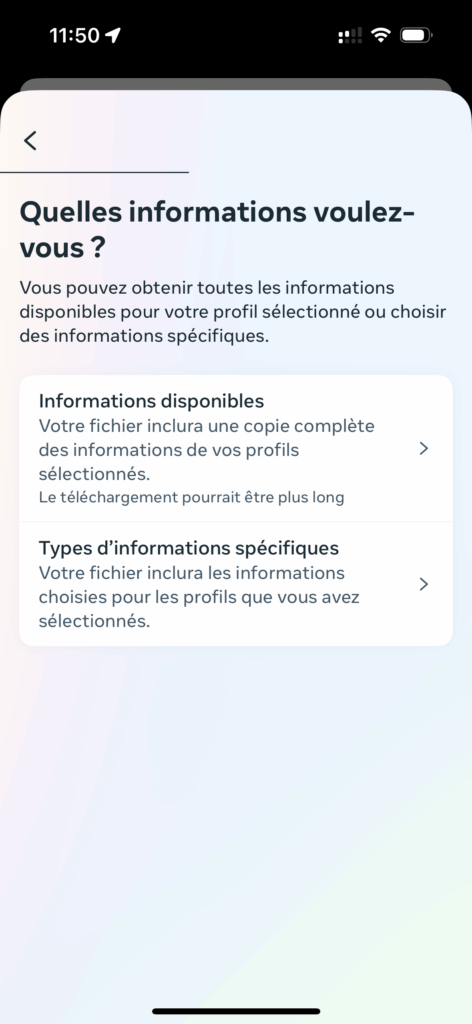

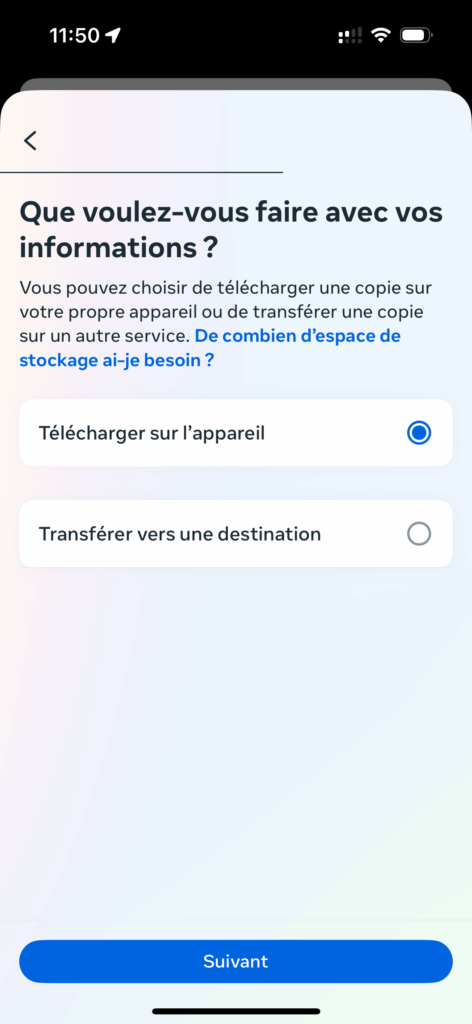

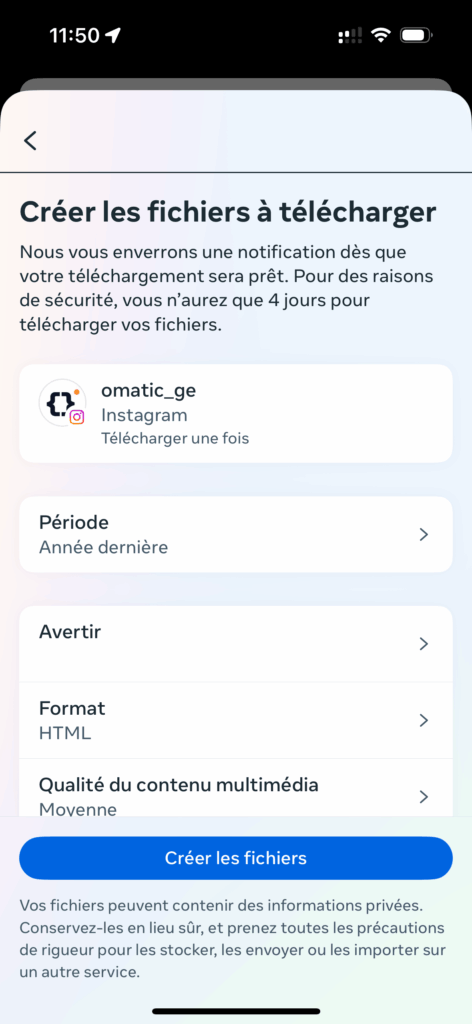

Obstruction

The user faces obstacles in performing their action.

Example: Users who want to download their personal data from Facebook face a flood of information and dissuasive steps (although the process is simpler today than it was in 2018).

Preselection

An option is selected by default to influence decision-making.

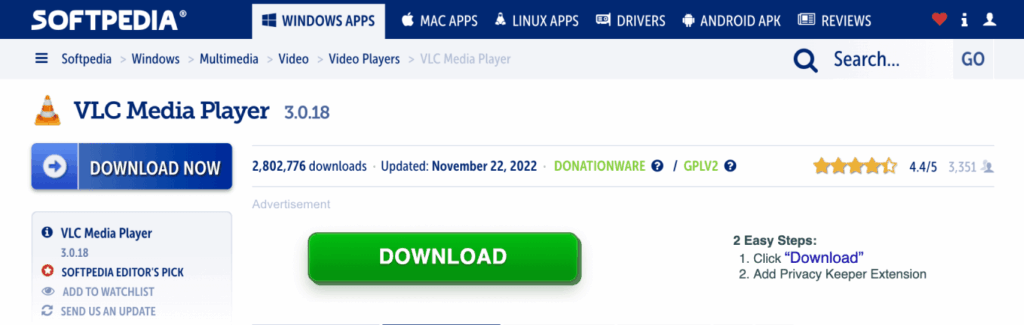

Example: In an Underscore_ video, a former employee of a cybercriminal company reveals that certain services let users download popular software bundled with hidden malware. Unless the user chooses the advanced installation, preselected options add extensions to the computer that track everything the user does on the web in order to serve increasingly targeted adverts.

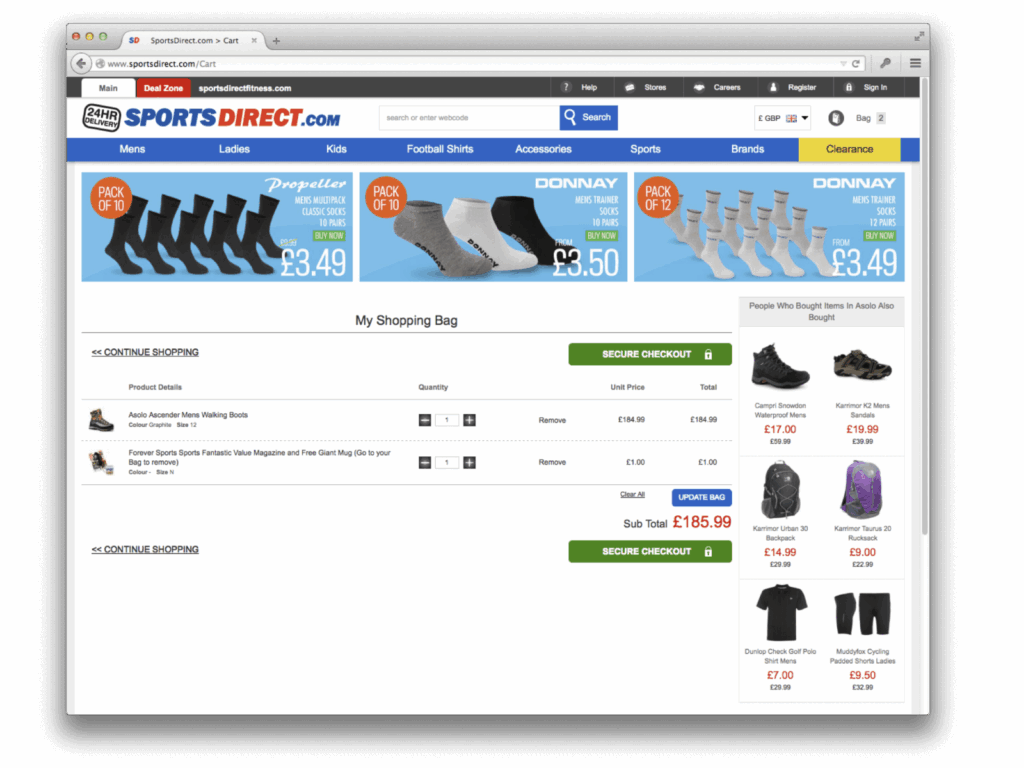

Sneaking

The user is drawn into a transaction under false pretences because relevant information is withheld or only partially disclosed.

Example: In 2015, the website sportsdirect.com added an unwanted magazine subscription to users’ shopping baskets for an additional £1 with every purchase.

Trick wording

The user is misled by the choice of words.

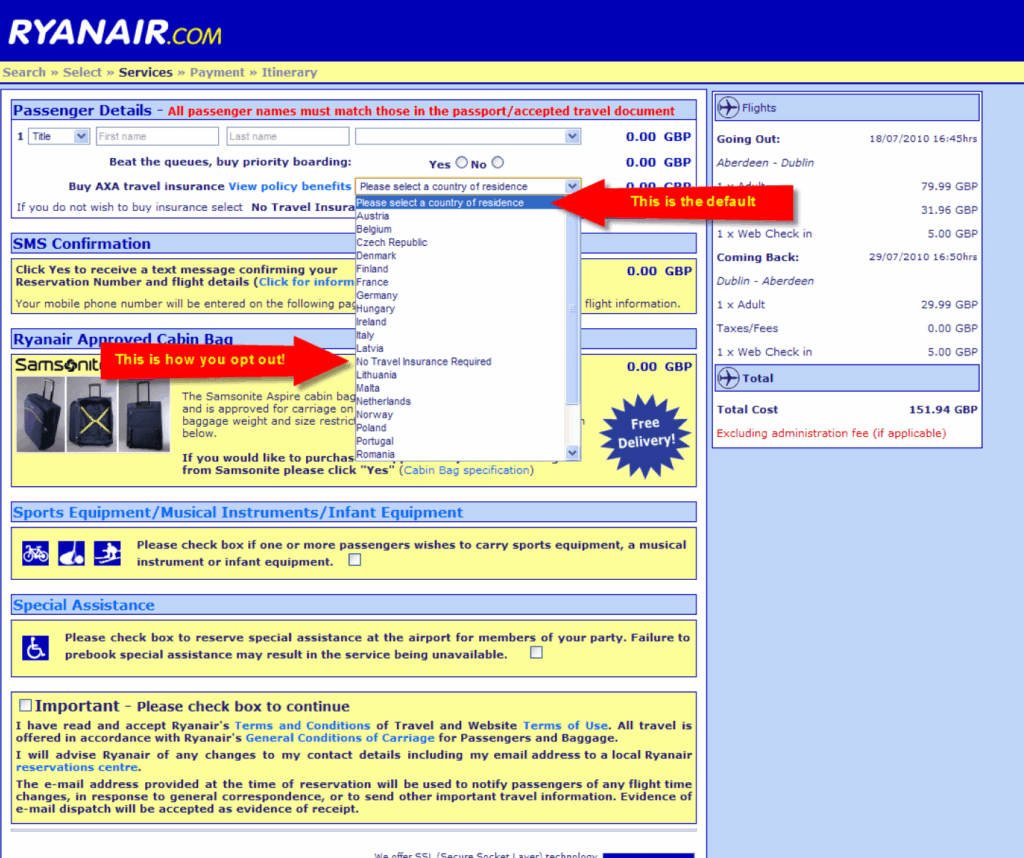

Example: In the early 2010s, Ryanair insidiously added travel insurance by asking travellers to select their country of residence from a drop-down list. To decline the insurance, users had to find and select an opt-out option tucked away within that same list of countries. The instructions explaining how to avoid the insurance were written below the list and hidden by it when it was open.

Visual interference

The user expects to see information presented clearly, but it is hidden or disguised.

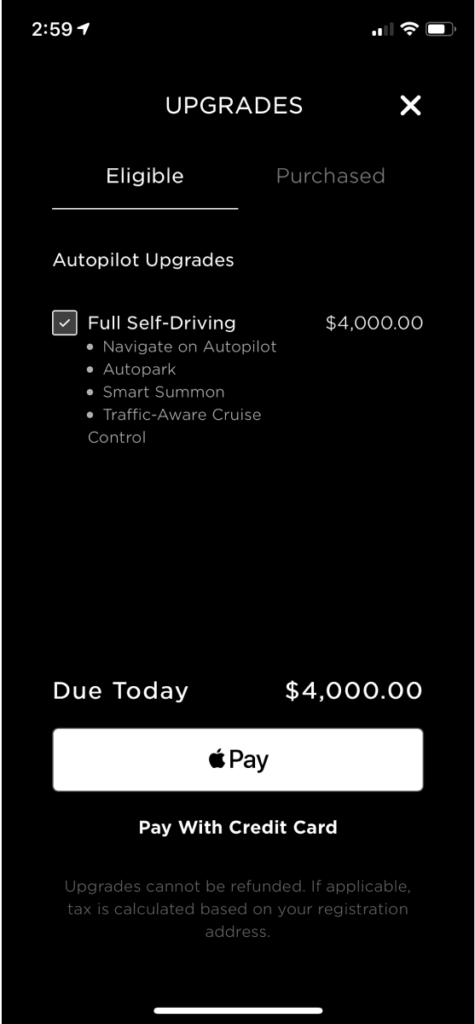

Example: In 2019, Tesla owners could purchase upgrades (autopilot, automatic parking, etc.) from the mobile app. Some users who bought an option by mistake filed complaints, but the notice stating that refunds were not possible was barely visible on the page.

The consequences of dark patterns

Dark patterns are insidious nudges that make it costly or difficult for users to take actions that do not suit a company’s interests.

Many users fall victim to dark patterns every day, while numerous companies and marketing departments devise new ways to divert users from their primary goal in order to serve their own ends.

The consequences of dark patterns vary in severity depending on how aggressive the pattern is. Users who recognise a pattern may lose confidence in the service or become more suspicious when they encounter it again.

The best-known example is Facebook, which has been heavily criticised for its use of dark patterns. In 2018, Cambridge Analytica was taken to court for collecting data from millions of Facebook users without their consent. The case cost Facebook the trust of its users and made the social network the target of government investigations. Facebook was then forced to change its privacy policy to better respect its users.